Our State-of-the-Art Equipment

At Precision Analytics Group, we believe that delivering exceptional data-driven solutions requires more than just expertise – it demands cutting-edge tools and equipment. Our commitment to innovation is reflected in our investment in the latest technologies, ensuring that our team has the resources to tackle even the most complex analytical challenges for Chicago businesses. From high-performance computing clusters to advanced data visualization software, we equip ourselves to provide unparalleled insights and value to our clients. We continually upgrade our infrastructure to stay ahead of the curve and maintain our position as a leader in the data analytics industry in Chicago.

Hardware Infrastructure

High-Performance Computing Clusters

Our high-performance computing (HPC) clusters are the backbone of our analytical capabilities. These clusters consist of numerous interconnected servers working in parallel, allowing us to process vast datasets and run complex simulations quickly and efficiently. The HPC infrastructure enables us to handle large-scale data analysis, statistical modeling, and machine learning tasks that would be impractical on standard desktop computers. The added computational power shortens processing times, so our analysts can iterate quickly and deliver results faster to our Chicago-based clients. The clusters are housed in a secure, climate-controlled data center with redundant power and network connections to maximize uptime and reliability.
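As a rough illustration of the "working in parallel" idea, the sketch below splits a dataset into chunks, computes partial results independently, and combines them. It is a minimal single-machine example, not our cluster software: threads stand in for cluster nodes, and the function names (`split_into_chunks`, `partial_stats`, `parallel_mean`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into at most n_chunks contiguous, roughly equal pieces."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def partial_stats(chunk):
    """Work done independently on each chunk: return (count, sum)."""
    return len(chunk), sum(chunk)


def parallel_mean(values, n_workers=4):
    """Compute the mean by combining per-chunk partial results."""
    if not values:
        raise ValueError("values must not be empty")
    chunks = split_into_chunks(values, n_workers)
    # Assumption for illustration: threads play the role of cluster nodes.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_stats, chunks))
    total_count = sum(count for count, _ in partials)
    total_sum = sum(subtotal for _, subtotal in partials)
    return total_sum / total_count
```

The same split, compute, and combine pattern applies when the workers are separate servers; only the mechanism that distributes the chunks changes.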

Data Storage Solutions

Efficient data storage is critical for managing the large volumes of information we handle. Our data storage solutions incorporate a multi-tiered approach, combining high-speed solid-state drives (SSDs) for frequently accessed data with large-capacity hard disk drives (HDDs) for archival storage. This hybrid system provides an optimal balance of speed, capacity, and cost. Furthermore, we utilize network-attached storage (NAS) and storage area network (SAN) technologies to ensure that our data is accessible to all members of our analytical team. Data redundancy and backup systems are in place to protect against data loss and ensure business continuity. We also adhere to strict data security protocols to protect sensitive information from unauthorized access.
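The tiering idea can be sketched as a simple placement rule. This is an assumed policy for illustration only: the 30-day threshold, the tier labels, and the names `choose_tier` and `plan_placement` are hypothetical, not a description of our production configuration.

```python
from datetime import datetime, timedelta

# Hypothetical threshold: data touched within 30 days counts as "hot".
HOT_WINDOW = timedelta(days=30)


def choose_tier(last_accessed, now):
    """Return 'ssd' for recently accessed data and 'hdd' for archival data."""
    return "ssd" if now - last_accessed <= HOT_WINDOW else "hdd"


def plan_placement(datasets, now):
    """Map each dataset name to a storage tier based on its last access time."""
    return {name: choose_tier(ts, now) for name, ts in datasets.items()}
```

For example, with `now = datetime(2024, 6, 30)`, a dataset last read on 25 June 2024 would be placed on SSD, while one last read in 2020 would go to HDD.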

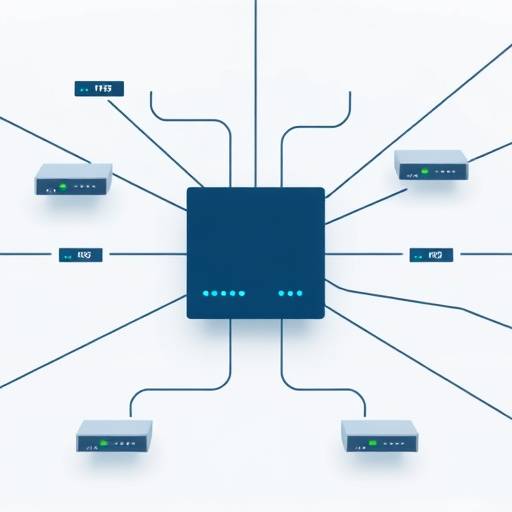

Network Infrastructure

A robust and reliable network infrastructure is essential for seamless data transfer and communication within our organization. We utilize high-bandwidth Ethernet connections and fiber optic cables to ensure fast and low-latency connectivity. Our network is designed with redundancy in mind, incorporating multiple network paths and backup routers to minimize the risk of network outages. We also implement network segmentation and firewalls to enhance security and prevent unauthorized access to sensitive data. Regular network monitoring and performance testing are conducted to identify and resolve potential issues proactively.

Software Tools and Applications

Advanced Data Visualization Software

Data visualization is a key component of our analytical services. We use industry-leading data visualization software such as Tableau, Power BI, and D3.js to create compelling and informative visualizations that help our clients understand complex data patterns and trends. These tools allow us to generate interactive dashboards, charts, and graphs that can be easily customized to meet specific business needs. Our data visualization experts work closely with clients to design visualizations that are both aesthetically pleasing and highly effective in communicating key insights. We provide training and support to help clients effectively use these tools to explore their own data.

Statistical Analysis Software

Our analysts utilize a wide range of statistical analysis software packages to perform advanced statistical modeling and data analysis. These include R, Python (with libraries such as Scikit-learn, Pandas, and NumPy), and SAS. These tools provide a comprehensive set of statistical functions and algorithms that enable us to perform tasks such as regression analysis, hypothesis testing, time series forecasting, and machine learning. We also develop custom algorithms and models tailored to the specific needs of our clients. Our statistical analysis experts have extensive experience in applying these tools to a variety of business problems.
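To make "regression analysis" concrete, here is a minimal sketch of ordinary least squares for a single predictor. It uses only the Python standard library so it runs anywhere; in practice a library such as Scikit-learn would typically be used instead. The function name `fit_line` and the sample numbers are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("xs and ys must have the same length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross-deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must contain at least two distinct values")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up example data: y grows by 2 for every unit of x.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
```

Here `slope` is the estimated change in the outcome per unit change in the predictor, and `intercept` is the predicted outcome when the predictor is zero.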

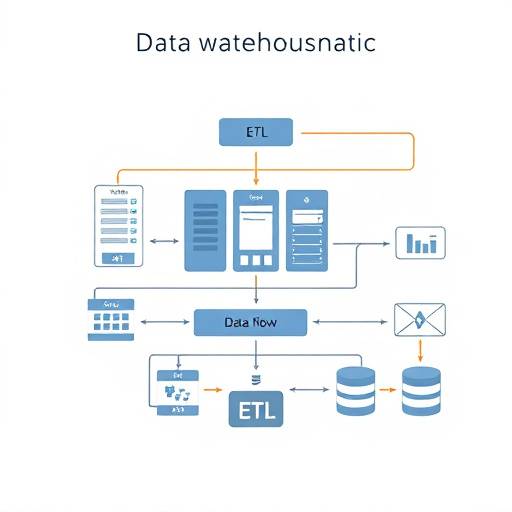

Data Warehousing and ETL Tools

Efficient data management is crucial for effective data analysis. We use data integration and ETL (Extract, Transform, Load) tools, such as Apache Kafka for streaming ingestion, Apache Spark for large-scale data processing, and Informatica PowerCenter for ETL workflows, to streamline the process of collecting, cleaning, and integrating data from various sources. These tools allow us to build robust data warehouses that provide a centralized repository of data for analysis. Our ETL processes automate the transformation and loading of data, which helps keep data quality consistent. We also implement data governance policies and procedures to protect the integrity and security of our data.
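The extract, transform, and load steps can be sketched in a few lines. This is a toy example using only the Python standard library, with an in-memory SQLite database standing in for a data warehouse; the sample data, the `sales` table, and all function names are hypothetical and do not reflect any of the tools named above.

```python
import csv
import io
import sqlite3

# Made-up raw input with typical problems: stray spaces, mixed case, a gap.
RAW_CSV = """customer,region,revenue
Acme ,midwest,1200.50
Globex,MIDWEST,
Initech,northeast,980
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim names, lowercase regions, drop rows without revenue."""
    cleaned = []
    for row in rows:
        revenue = (row["revenue"] or "").strip()
        if not revenue:
            continue  # skip records with a missing revenue value
        cleaned.append(
            (row["customer"].strip(), row["region"].strip().lower(), float(revenue))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer TEXT, region TEXT, revenue REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(text, conn):
    """Run the three stages in order."""
    load(transform(extract(text)), conn)
```

Calling `run_pipeline(RAW_CSV, sqlite3.connect(":memory:"))` loads two cleaned rows and skips the record with no revenue.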

Equipment Inventory

A comprehensive list of our equipment, including specifications and usage details, is maintained for internal auditing and resource management. Here's a brief overview:

| Equipment Type | Model/Version | Specification | Primary Usage |
|---|---|---|---|
| Server | Dell PowerEdge R740xd | Dual Intel Xeon Gold 6248R, 256GB RAM, 48TB Storage | HPC Cluster Nodes |
| GPU | NVIDIA Tesla V100 | 16GB HBM2 Memory | Machine Learning Model Training |
| Software | Tableau Desktop | Version 2024.2 | Data Visualization and Dashboarding |
| Software | RStudio | Version 2024.07.1 | Statistical Analysis and Modeling |
| Cloud Instance | AWS EC2 | m5.4xlarge (16 vCPUs, 64GB RAM) | Scalable Data Processing |

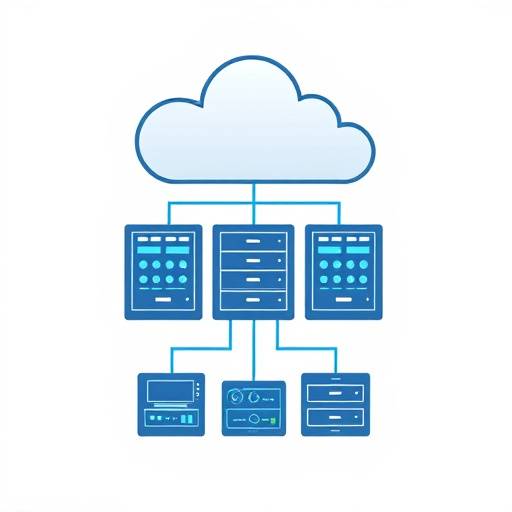

Cloud Computing Resources

Using cloud computing platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), we provide scalable and secure data processing and storage solutions. Our cloud-based infrastructure adds flexibility and reliability for our clients' analytical needs, offering on-demand access to resources and reducing the need for extensive on-premises hardware. This allows us to adapt quickly to changing project requirements and deliver cost-effective solutions.